Designing Formative Assessment That Improves Teaching and Learning: What Can Be Learned from the Design Stories of Experienced Teachers?

This article reports on findings of a qualitative study that investigated the difficulties teachers encounter while designing formative assessment plans and the strategies experienced teachers use to avoid those pitfalls. The pitfalls were identified through an analysis of formative assessment plans that searched for potential threats to alignment, decision-driven data collection, and room for adjustment and improvement. The main pitfalls in the design process occurred when teachers did not explicitly and coherently link all elements of their formative assessment plan or when they did not plan to effectively use information about student learning to improve teaching and learning. Through interviews with experienced teachers, we identified seven design strategies they used to design formative assessment plans that were aligned, consisted of decision-driven data collection, and left room for adjustment and improvement. However, these experienced teachers still encountered difficulties in determining how to formulate the right decisions for decision-driven data collection and how to provide students with enough room for improvement. Lessons learned from the design strategies of these experienced teachers are incorporated in design steps for formative assessment plans all teachers can use.


Formative assessment can be seen as an ongoing process of monitoring students’ learning to decide which teaching and learning actions should be taken to better suit students’ needs (Allal, 2020; Black & Wiliam, 2009). Activities that are part of effective formative assessment include clarifying expectations, eliciting and analyzing evidence of student learning, communicating the outcomes with students, and performing suitable follow-up activities in teaching and learning (Antoniou & James, 2014; Ruiz-Primo & Furtak, 2007; Veugen et al., 2021). Formative assessment reveals students’ learning progress and what is needed to further this learning. Teachers can use this information to make better informed formative decisions about the next steps in teaching (Black & Wiliam, 2009).

Since formative assessment strengthens the connection between teaching and learning, it can be a solid intervention for improving both. However, implementing formative assessment that “works” is challenging for teachers. Research describes many pitfalls teachers can encounter when implementing formative assessment. For example, studies that investigated the implementation of formative assessment in practice conclude that in order to be effective, there needs to be more consideration for the integration, coherency, and alignment of formative assessment in classroom practice (Gulikers et al., 2013; Van Den Berg, 2018; Wylie & Lyon, 2015). Formative assessment should be aligned with learning objectives, lesson activities, and other assessment activities (Biggs, 1996; Gulikers et al., 2013). Moreover, since learning objectives often exceed lessons, this alignment of formative assessment should even be considered for multiple related lessons.

Additionally, Wiliam (2013, 2014) states that formative assessment activities that elicit evidence about student learning should also be designed in alignment with the decisions teachers wish to make based on the outcomes of these activities. Therefore, he recommends decision-driven data collection to ensure teachers and students receive the timely information they need to make well-informed formative decisions about the next steps in teaching and learning (Wiliam, 2013). However, teachers do not always incorporate decision-driven data collection in formative assessment, and this can be a pitfall when, for instance, existing data on student learning does not represent the current situation of learners or comes too late for a meaningful follow-up (Wiliam, 2013).

A recent study conducted by Veugen et al. (2021) examined students’ and teachers’ perceptions of formative assessment practice and revealed a final example of difficulties teachers seem to encounter when they implement formative assessment. Veugen et al. found that teachers who implement formative assessment use activities that clarify expectations and elicit and analyze student reactions. This results in feedback for the students, but the teachers report few adaptations to teaching and learning based on the outcomes of these activities. Without such follow-up activities, it is unlikely that formative assessment enhances student learning because students do not get the opportunity to use the feedback they were given, and teachers do not get the opportunity to adapt their teaching to students’ needs (Black & Wiliam, 2009; Veugen et al., 2021). Formative assessment is not complete without a follow-up where students and teachers have room for adjustment and improvement. This room can be created in lessons that follow the analysis and communication of evidence of student learning. In summary, teachers encounter a range of difficulties when implementing formative assessment. Formative assessment should be aligned, include decision-driven data collection, and leave room for adjustment and improvement but, in practice, these criteria are rarely met.

It seems to be a complex task for teachers to consider these three criteria when conducting formative assessment. As a result, some teachers succeed in enacting formative assessment as recommended in the literature, while others experience more difficulties in reaching this goal (Offerdahl et al., 2018; Veugen et al., 2021). Previous research suggests that pre-planning formative assessment is fundamental for teachers to ensure the effectiveness of these activities by encompassing all essential characteristics (van der Steen et al., 2022). So far, literature focusing on designing formative assessment has concentrated mainly on designing individual formative assessment activities (Furtak et al., 2018). However, only when teachers design formative assessment in plans that encompass multiple lessons can they tackle difficulties such as alignment and planning follow-up activities in an effective and feasible way (van der Steen et al., 2022; Wiliam, 2013). Taking a broad view of multiple lessons helps teachers consider the alignment between all lessons and activities that contribute to achieving the intended learning objectives. Furthermore, it increases their opportunities to plan for room for adjustment and improvement.

Based on the outcomes of earlier research (van der Steen et al., 2022), 64 teachers from four secondary schools were given time and knowledge to help them design formative assessment plans that met the criteria: alignment, decision-driven data collection, and room for adjustment and improvement. Still, even in this context, differences emerged between teachers when they were designing formative assessment. It seemed that teachers who already had experience with formative assessment in their classroom had an advantage in successfully designing formative assessment plans over teachers who did not yet have this experience.

Since there is a lack of literature about how teachers design formative assessment plans (van der Steen et al., 2022), it is unclear how teachers who design and implement formative assessment successfully design their formative assessment plans. Which design strategies do they use, and what can other teachers learn from their experiences? Therefore, the present study focuses on how experienced teachers design formative assessment plans aligned with learning objectives, lessons, and prospective formative decisions while taking follow-up actions into account. Once it becomes clear how teachers design such formative assessment plans, this knowledge can be used to support teachers who struggle with implementing formative assessment as intended. Therefore, the outcomes of this study will result in design steps and strategies for all teachers.

Accordingly, the research question for this study is:

How do experienced teachers design formative assessment plans that are aligned, include decision-driven data collection, and leave room for adjusting and improving teaching and learning?

Sub-questions that help answer this research question are:

The context of this study is an educational design research project funded by a grant which provided four secondary schools with the opportunity to advance formative assessment in their schools. At these schools, teachers designed formative assessment plans in teacher learning communities. Teacher learning communities are groups of teachers that come together for sustained periods of time — in this case, 15 meetings during a 16-month period — to engage in inquiry and problem solving with the goal of improving student learning (Van Es, 2012). The teacher learning communities in this study focused on improving formative assessment and formative decision-making by designing formative assessment plans. The activities in the teacher learning communities were coordinated by the first author, who provided the teachers with information and support in designing formative assessment plans.

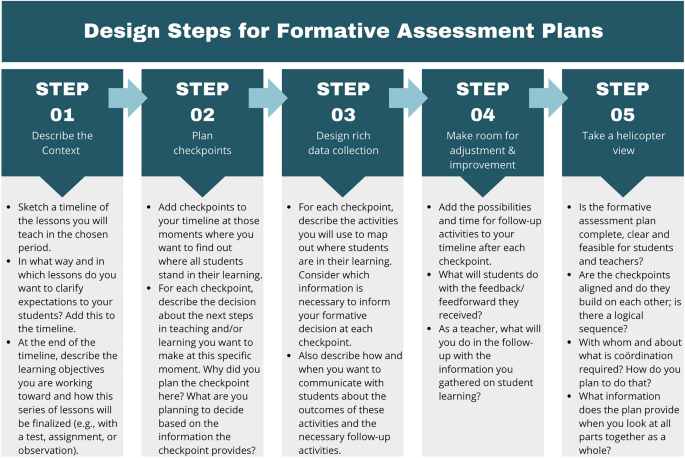

Each school had a teacher learning community that consisted of 11 to 24 teachers — a total of 64 teachers across the four schools — who designed formative assessment plans for their lessons according to five design steps (Fig. 1). These five design steps are based on design principles for formative assessment plans that meet the three quality criteria: alignment (design steps 1 and 5), decision-driven data collection (design steps 2, 3, and 5), and room for adjustment and improvement (design step 4) (van der Steen et al., 2022).

During a previous design cycle, teachers used an earlier version of the design steps for the first time. Thus, most teachers had experience designing a formative assessment plan prior to this study. The design steps were evaluated and adjusted based on group interviews and an analysis of the formative assessment plans designed during that first design cycle. The adjustments mainly concentrated on making the design steps more concise and emphasizing, within the design steps, the importance of communication with students, the link with formative decision-making, and planning room for students and teachers to improve.

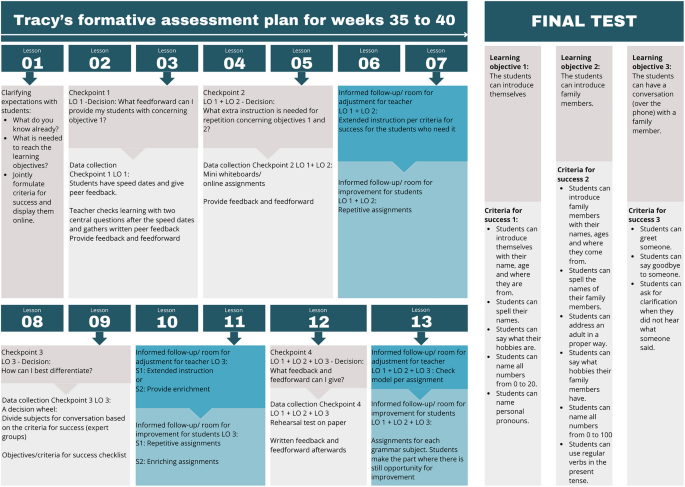

In this study, the formative assessment plans the teachers designed and design stories of experienced teachers are used to answer the research questions. Figure 2 shows an example of a formative assessment plan.

This study has a qualitative research design. The first sub-question will be answered by analyzing the collected formative assessment plans for the presence and different appearances of alignment, decision-driven data collection, and room for adjustment and improvement, together with the pitfalls that prevent formative assessment plans from meeting these criteria. The second sub-question about what experienced teachers do to avoid these pitfalls will be answered based on interviews with experienced teachers who participated in this project.

Thirty-one teachers of 15 subjects from the four participating secondary schools were involved in answering sub-question 1 (see Table 1). All teachers (n = 64) who participated in the teacher learning communities at one of the four schools were asked to send their formative assessment plans if they had finalized them by that time. To get a representative and information-rich overview of the pitfalls teachers encounter while designing formative assessment, regardless of their experience in teaching and formative assessment or the subjects they taught, the researchers aimed to gather all finished formative assessment plans. This request resulted in 26 formative assessment plans from 31 teachers (presented in Table 1).

Table 1 Overview of collected formative assessment plans (n = 26)

To answer sub-question 2, experienced teachers were recruited from the participating schools. To ensure the interviews provided in-depth information for this study, the teachers had to be actively involved in the design project and have multiple years of experience with formative assessment so they could really understand and explain their choices and considerations in the design process.

All four participating schools were asked to find two teachers for the interviews who (1) agreed to contribute to this research via interviews, (2) had successfully finished designing their second formative assessment plan, and (3) had experience with formative assessment prior to the start of the teacher learning communities. At one school, where working with formative assessment was relatively new, no teachers who met these criteria could be found. The other three schools each found two teachers, as presented in Table 2 (all names are pseudonyms).

Table 2 All interviewed teachers and the subjects they teach

The table does not show the schools at which these teachers are employed, and a more general description was chosen for the language teachers to ensure their anonymity.

The 26 formative assessment plans came from 15 subjects and all four schools, varying from five to eight plans per school. That variety makes it likely that this collection of plans can provide a representative sample of formative assessment plans designed based on the five design steps, so there was no need to gather more plans.

Before analyzing the plans to answer sub-question 1, a description was made of the criteria each element must meet to receive a positive evaluation. These were as follows:

The first step in analyzing the formative assessment plans was evaluating the plans on the three criteria: alignment, decision-driven data collection, and room for adjustment and improvement. This analysis was conducted by two researchers individually: the first author and one colleague researcher.

Second, for each criterion, the researchers discussed the differences in their evaluations of the quality of the plans before they addressed the differences and similarities between the plans that succeeded in meeting the criterion and those that did not. What pitfalls appear in the plans that did not meet a criterion, and what can be learned from the plans that did? Some plans were more elaborately described and explained than others. Therefore, the results in this study are an analysis of the pitfalls in the plans with a clear and elaborate description and the possible pitfalls in the less well described plans.

Semi-structured teacher interviews were used to answer sub-question 2 and gain deep insight into the steps experienced teachers take to design their formative assessment plans. What did they do in addition to or differently from the five design steps, and how did this contribute to meeting the three quality criteria for formative assessment plans?

In the interviews, teachers were asked about their design process. Each interview started with the question: “How did designing this formative assessment plan come about?” Possible follow-up questions were: (a) “What did you do?,” (b) “Which steps did you take in designing this plan?,” and (c) “What difficulties did you encounter in designing this plan, and how did you resolve them?”

After the teachers explained their design process in their own words, the conversation turned to comparing the design steps to the design story the teacher had just shared. Where had the teacher followed the five design steps, and where did their process differ? For example, the teachers were asked about their choices in step 2 of the design process about when and why they had planned checkpoints, what they chose to do at each checkpoint to elicit information about student learning, and whether they had linked checkpoints together.

The interviews were transcribed and coded through template analysis (Brooks et al., 2015). The statements and comments about the teachers’ decisions and actions during the process of designing their formative assessment plan were coded using the five design steps (Fig. 1) and put into a narrative for each teacher. Based on each narrative, the researchers used the teachers’ choices and actions that contributed to alignment, decision-driven data collection, and room for adjustment and improvement to answer the questions about what experienced teachers do in their design process to meet the three criteria this study focuses on (sub-questions 2a, 2b, and 2c).

Sub-question 1 was: “What are pitfalls that can threaten the design of formative assessment plans that are aligned, include decision-driven data collection, and leave room for adjusting and improving teaching and learning?” The results from analyzing the formative assessment plans are presented per criterion.

The main pitfalls related to alignment were:

The three main pitfalls related to decision-driven data collection were:

The three pitfalls related to room for adjustment and improvement were:

We used the narratives of six teachers (as presented in Online Resource 1) to answer sub-question 2: “How do experienced teachers design formative assessment plans that are aligned with learning objectives, lesson activities, and other assessment, include decision-driven data collection, and leave room for adjusting and improving teaching and learning?” The results are presented per criterion.

All the teachers started with an existing series of lessons. According to Tracy, this is a coherent foundation from which to start when planning formative assessment if those lessons were designed based on the desired learning objectives (as they were in these cases). Tracy started with a series of lessons that also was aligned externally with an annual schedule that included all learning objectives and criteria for success and was consistent and aligned with the years ahead of or behind the current class. However, Patricia added that these planned series of lessons are not fixed. She stated that aligning formative assessment with existing lessons and lesson activities is an active process of determining whether lesson planning requires something different based on the choices made in designing formative assessment and simultaneously determining how formative assessment can enhance learning.

All the teachers described continuously checking for alignment during the design process, looking at the learning objectives, (formative) assessment, and lesson activities collectively. Stuart even believes checking for coherency and alignment should continue after the design process during and after the execution of the formative assessment plan: “Only then can you really fly over it and notice whether the cohesion is good enough.”

All the teachers made sure they understood the learning objectives by taking time to zoom in and formulate criteria for success and/or to zoom out to look for overarching learning objectives to bring everything together. Patricia, Stuart, and Jenna took time to look closely at learning objectives, transcend specifics, discover the coherence between objectives, or find broader objectives that can connect different learning objectives. Another group of teachers (Tracy, Stuart, Bernadette, and Lisa) took the time to formulate criteria for success so objectives would be more specific for teachers and students. Stuart analyzed former lessons, assignments, and previous misconceptions to get a good idea of the criteria for success that should be pursued. Lisa and Tracy formulated these criteria for success together with their students based on examples of work. Patricia, Jenna, and Tracy all mentioned that they think zooming in and out on learning objectives with colleagues is a valuable part of the design process.

After ensuring they comprehended the learning objectives, the teachers planned checkpoints that covered all the learning objectives (five teachers) or a selection of the learning objectives (one teacher). These checkpoints were linked explicitly to the learning objectives. Tracy clearly listed the learning objectives in her formative assessment plan to continuously verify that all activities aligned with what she wanted to accomplish with her class. For Stuart, it was essential to determine which learning objectives and criteria for success were being targeted in each lesson so he could refer to them in his instructions and assignments to the students. This will make it easier for students to reflect on their learning based on these learning objectives and criteria for success because they will be present in each lesson.

Most teachers designed their formative assessment to make it easy to integrate into their existing lesson plans. For example, Tracy said: “I actually really looked at which assignments I use to get them to practice and, based on those assignments, I decided which data collection activity would fit in easily.” Patricia planned activities not only as a means to collect data on students’ learning but also as an opportunity for students to repeat and rehearse the selected learning objectives. Stuart and Lisa added that they designed formative assessment to collect data on learning in the same way they would design test items for the final test.

The teachers wanted to use the information gathered at the checkpoints to make three decisions:

Sometimes the information gathered at one checkpoint applied to several of these decisions.

When designing decision-driven data collection, the teachers were primarily concerned with how to measure what they needed to know to inform the next formative decision. For example, Lisa planned to use a drawing instead of a question to allow every student to show their learning on the topic unimpeded by their writing skills. Stuart did not want to analyze reflection forms since they only demonstrate students’ perceptions of learning, not their progress. Likewise, Jenna did not want to analyze summaries since she does not think they illustrate what students have learned but only whether they can summarize.

Stuart added that he plans decision-driven data collection through exit tickets or mini whiteboards because this gives him more information about all students’ learning than he could acquire by walking around while students do their homework in class.

Because it is quite a pitfall that if you see something go wrong with one person, you zoom in completely on that person. Before you know it, you are working with them for five or six minutes and, supposing they have 15 minutes to work independently, then you have time to see two such students. Then my harvest is two or three students. If I base my data collection on those whiteboards and exit tickets or other formative elements, I get more of an overall picture, and I find that much more valuable.

Patricia emphasized that it is crucial to gather rich information (e.g., about existing misconceptions) instead of a count of how many questions were answered incorrectly. However, she also mentioned that it is not always evident whether a teacher collects rich data in a formative assessment plan: “I believe it is rich information because you certainly address the mistakes you saw and the corresponding underlying misconceptions. Nevertheless, that is so self-evident that it is not mentioned here.”

Data used to inform decisions at each checkpoint is collected in different ways. Most of the teachers planned to use combinations of data collection methods to get a complete overview of all students’ learning, but they did this in various ways. Some wanted to combine data collection at the checkpoints with planned or on-the-fly daily checks during the lessons before the checkpoint. For example, Stuart plans daily checks of specific lesson goals by using mini whiteboards and exit tickets in addition to formal checkpoints that reveal learning on the overarching learning objectives. Lisa planned data collection at the checkpoint together with data collection via an online tool that helps her follow how students did on their assignments in the previous lessons. This helps her gather all the information she needs to decide on the next best step after a checkpoint.

However, other teachers only collect data at the planned checkpoints, at which time they aim to gather rich information on all students by using multiple data collection methods simultaneously. Tracy, for instance, combined walking around the classroom while students worked on their assignments with gathering written peer feedback on the same assignment at her first checkpoint. At the second checkpoint, she combined online assignments with answers from mini whiteboards.

The final variation, mentioned by Bernadette and Tracy, was using checkpoints not to gather new information on learning but to bring together all relevant information on learning from all prior lessons until that moment. This can result in rich information and help teachers make formative decisions that have consequences for all students based on information from all students. For example, when they cannot let every student speak during a lesson, they combine evidence from multiple lessons to get a complete overview of students’ speech.

Experienced teachers recognize how difficult it is to find time to make room for adjustment and improvement in an overfull curriculum. Some teachers said that follow-up is the part of formative assessment that takes the most time and is thus the first thing to be skipped when time is tight. However, Jenna also stated that this is the essential part, and the other teachers seemed to agree since they all have strategies to help them make room for adjustment and improvement.

Three teachers prepared different possible follow-ups in advance to help them act on the checkpoints’ outcomes immediately. Patricia, Stuart, and Bernadette prepared slides, instructions, tutorials, and/or assignments for students so they would be ready once they knew what was needed to advance students’ learning. In this preparation, they considered different possible outcomes and differences between students (e.g., they prepared assignments for students who had reached particular learning objectives and those who still needed to). Instead of only focusing on the students who did not reach a targeted learning objective, all the teachers planned room for adjustment and improvement for all students. Patricia and Tracy created common assignments for their students that would simultaneously advance the learning of students who had as well as students who had not yet reached a targeted learning objective.

Tracy and Jenna warned that when a curriculum contains too much material, teachers have less flexibility in reacting to outcomes that are different than expected. Therefore, Jenna, Patricia, and Stuart advised their fellow teachers to be aware of and look for possibilities to leave something out or create more room in the curriculum when planning their lessons. Stuart mentioned many examples of how he makes room for adjusting and improving teaching and learning. For example, he and Jenna plan checkpoints to help them decide whether they can skip (parts of) instruction. Additionally, Stuart creates plans that will help the few students who need it by assigning tutorials and extra assignments to complete at home, instead of taking a whole lesson for extra instruction and practice.

Patricia, Jenna, and Stuart also delay more advanced tasks until formative assessment shows that students are ready to take this next step. That gives them more room for adjustment and improvement on the basics at the start of a lesson plan. Jenna:

If you do not do that well, do not lay that foundation properly, you can start working on another subject soon. However, the students still have not mastered that, and next comes something that practically goes back to this, so the foundation must be set correctly.

Stuart even finds room for adjustment and improvement after the final test, since he plans room for student improvement in the successive lessons and in assignments for future lessons that take place after the chapter’s final test: “Then they understand that it is not just for now and the next test, but learning is continuous.” According to Stuart, giving students room for improvement could even mean moving a test to a later date. Bernadette, Stuart, Lisa, and Jenna also make sure students take responsibility for designing their own room for improvement by having students think about the best follow-up. Lisa also wants to give students information based on the checkpoints that they can use to make their own improvement plans.

This study focused on the research question: How do experienced teachers design formative assessment plans that are aligned, include decision-driven data collection, and leave room for adjusting and improving teaching and learning? Through the interviews with experienced teachers, seven strategies were found that they used to design formative assessment plans that meet these criteria:

To discover the difficulties teachers can encounter in designing formative assessment plans that are aligned, include decision-driven data collection, and leave room for adjusting and improving teaching and learning, sub-question 1 asked which pitfalls can threaten the design of formative assessment plans. One frequently found pitfall was that formative assessment plans were incomplete or unclear. A formative assessment plan needs to clearly describe, explicitly link, and consciously match learning objectives, lesson activities, and assessment in line with what Biggs (1996) defined as constructive alignment. Additionally, a formative assessment plan needs to clearly describe, explicitly link, and consciously match intended formative decisions, data collection and follow-up to meet the criteria of decision-driven data collection and room for adjustment and improvement.

The other pitfalls that were found were:

Sub-question 2 was aimed at discovering what experienced teachers do to design formative assessment plans that are aligned, include decision-driven data collection, and leave room for adjustment and improvement. The interviewed teachers mentioned seven design strategies that help them avoid most of the pitfalls listed above, as well as two other pitfalls that they experienced while designing formative assessment. The design strategies will be presented in more detail per criterion, together with the implications for improving the design steps. All implications are shown in a revised version of the design steps in Online Resource 2. The pitfalls for which these teachers had not yet found a solution will also be presented, together with the implications for future research.

The six interviewed teachers used three design strategies to ensure sufficient alignment in their formative assessment plans:

These findings have implications for design steps 1, 3, and 5:

The experienced teachers used two design strategies to design decision-driven data collection (Wiliam, 2013, 2014):

If data is consciously collected to match and inform the formative decisions, all these variations can be considered decision-driven data collection. The important overarching design strategy is that teachers combine information from multiple data collections to inform their formative decisions.

As for decision-driven data collection, the two strategies experienced teachers mentioned are already incorporated in the design steps. However, the importance of these strategies can be emphasized in design steps 3 and 5 based on this study:

Most of the experienced teachers in this study acknowledged the difficulty of creating room for adjustment and improvement (Veugen et al., 2021). As a result, the three design strategies the teachers used to create room for adjustment and improvement focus on increasing the possibility to use the outcomes of the checkpoints:

Most teachers thought it was also important that students learned to make their own improvement plans and become co-responsible for the learning process.

As for the design steps, the design strategies for creating room for adjustment and improvement may lead to changes in design steps 4 and 5:

Apart from the design strategies, the interviews also disclosed that even experienced teachers face difficulties in designing formative assessment. The two pitfalls that were mentioned can make it hard for teachers to design and implement formative assessment plans that are aligned, include decision-driven data collection, and make room for adjustment and improvement, despite the strategies they already use.

The teachers reported that the formative decisions at the checkpoints were the foundation for their formative data collection. These decisions were limited to: “Can I go on with the next lesson/chapter/learning objective or is something else needed?” However, to ensure that decision-driven data collection contributes to creating the most suitable follow-up for students, the decisions should go beyond “Can I go on or not?” and include “What is the best way to move forward?” Therefore, the decisions that are the foundation of decision-driven data collection should have a double focus.

The first focus refers to the “yes or no” decision (e.g., “Can I go on to the next chapter?” or “Is it necessary to differentiate?”). To this, teachers need to add a “how to continue” decision (e.g., “What is the best way to go forward?” or “How should I differentiate in the next lesson?”, respectively). When teachers incorporate both in their formative decisions, it is more likely that decision-driven data collection will provide the information needed to choose the follow-up that best suits students’ needs.

The second focus involves collaboration. Teachers can use conversations with their students to discover the most suitable follow-up (Allal, 2020). Formative assessment will achieve its full potential when it is perceived as support for development and learning and as a way to discover how best to suit students’ needs, rather than being used solely for control and accountability and focused solely on the go or no-go decision (Ninomiya & Shuichi, 2016).

While one teacher advocated for perceiving learning and formative assessment as a continuous process that transcends specific chapters or series of lessons, the other teachers often wondered “How much room for improvement can I give my students?” or “How can I justify continuous and differentiated learning processes that suit students’ needs and still achieve all the learning objectives with all my students within a specific time?”

The differences between these teachers and the choices they make can be explained in several ways. The teachers’ pedagogical foundation and their knowledge and skills regarding formative assessment can play a role, as can the amount of space they perceive to define and shape their work within the school context (i.e., their professional agency) (Heitink et al., 2016; Oolbekkink-Marchand et al., 2022). These differences can be used to explain why one experienced teacher creates room for improvement during a new chapter or delays a test, while others wonder how much room for improvement they can give their students before they must move forward to the next subject, lesson, or learning objective. The amount of space and freedom teachers experience to let formative assessment lead their teaching decisions and the role teachers’ agency and experience play in this process would be interesting subjects for future research.

A first limitation of this study was that the conclusions about sub-question 1 are based on formative assessment plans that were only a reality on paper. The outcomes of the analysis were based on the complete plans and the possible risks perceived in the incomplete plans. Since the formative assessment plans are merely a written plan rather than a reflection of action, they do not always show what a teacher really planned to do. During the interviews, it became clear that when teachers explained their formative assessment plans, a lot of information about them had not been written down but was still essential to comprehend what they planned to do. Since only six teachers were interviewed, it is possible that asking more teachers to explain their formative assessment plans might have led to fewer, more, or different pitfalls in designing formative assessment.

Another limitation of this study was the inclusion of only six experienced teachers, mainly from theoretical subjects. It is unclear whether less experienced teachers or teachers of more practical subjects would use the same or other design strategies to meet the three criteria. Future research could focus on collecting a range of design stories from experienced and new teachers, with or without prior knowledge and skills concerning formative assessment, of both theoretical and practical subjects.

In conclusion, it is interesting to note a paradox about formative assessment. The current study showed that when teachers consciously prepare, plan, and match their formative assessment in advance, this helps them to achieve alignment, decision-driven data collection, and room for adjustment and improvement. However, this contradicts Black and Wiliam’s (2009) definition, which emphasizes that formative assessment “is concerned with the creation of, and capitalization upon, ‘moments of contingency’” (p. 10). When formative assessment is extensively planned, are teachers still able to pick up on the element of surprise? Decision-driven data collection can present them with different outcomes than expected, so an unforeseen follow-up may be needed to best suit students’ needs. How can teachers keep an inquiring and open mind when they collect and analyze evidence of learning after they planned their formative decisions, data collection, and follow-ups in detail in advance?

Thus, it would be interesting for future research to discover how teachers use information about students’ learning to inform their decisions. Do they analyze this information with enough openness and curiosity to really discern students’ needs and adjust their planned follow-up based on unexpected outcomes?

The data that support the findings of this study are available from the corresponding author, [JS], upon reasonable request. The data will not become publicly available before the end of the research project to which this study is connected.

We thank all who contributed to completing this study. We give special thanks to the schools that are our committed partners in learning about and designing formative assessment plans and to the teachers who took the time to share their formative assessment plans and design stories.

This work was supported by the Taskforce for Applied Research SIA, or Regieorgaan SIA (grant number RAAK.PRO03.057).

Janneke van der Steen: conceptualization, methodology, validation, formal analysis and investigation, data curation, writing — original draft preparation, visualization. Tamara van Schilt-Mol: conceptualization, methodology, supervision, validation, writing — review and editing, project administration, funding acquisition. Cees van der Vleuten: conceptualization, supervision, validation, writing — review and editing. Desirée Joosten-ten Brinke: conceptualization, supervision, validation, writing — review and editing.

Approval was obtained from the ethics committee of HAN University of Applied Sciences. The procedures used in this study adhere to the tenets of the Declaration of Helsinki. Informed consent was obtained from all individual participants in the study.

The authors declare no competing interests.
